The European Union has implemented its new Artificial Intelligence Act, but what does that entail exactly?

Well, the new rules that came into force on Aug. 1 will impact tech developers differently depending on the level of risk associated with the programs they have created. European authorities have divided them into four categories: minimal risk, specific transparency risk, high risk and unacceptable risk.

The first category includes companies that use AI-powered spam filters or AI-enabled video games. No restrictions will be placed on them.

Companies deemed to have specific transparency risk, such as those operating chatbots, must inform users that they are interacting with a machine. Moreover, they are required to properly label AI-generated content they create.

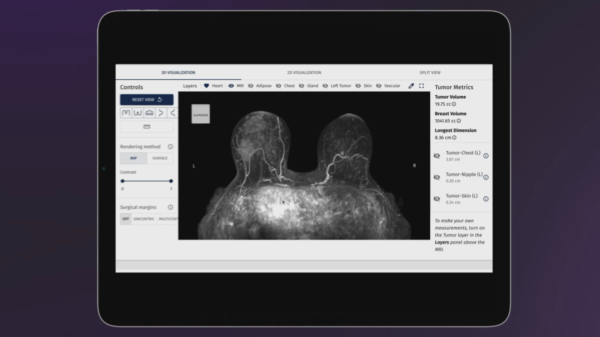

Developers of high-risk systems, such as companies that use AI-integrated medical software, must put rigorous risk-mitigation systems in place and provide high-quality data sets and clear user information. Other high-risk systems include those used for law enforcement, employment, automotive, judicial or migration purposes.

And lastly, those creating tech programs with an unacceptable level of risk will face legal consequences. Any AI that is considered to be a threat to human rights is effectively banned.

Prohibited AI uses include creating facial recognition databases through sourcing web content and CCTV footage, or anything that exploits a person’s vulnerabilities and circumstances to cause them harm.

A slow 24-month process; complaints inevitable

According to ChatGPT creator OpenAI and others, the new provisions for most high-risk AI systems won’t be fully applicable until 2026. “OpenAI is committed to complying with the EU AI Act and we will be working closely with the new EU AI Office as the law is implemented in the coming months,” the company said.

Companies operating in the European market, such as Germany’s Aleph Alpha, VERSES AI Inc. (CBOE: VERS) (OTCQB: VRSSF) in the Netherlands, or the French developer Mistral AI, will also need to pay close attention to the evolving framework of rules.

On another note, some have complained that there are major flaws in how the new AI Act is currently laid out.

“We regret that the final Act contains several big loopholes for biometrics, policing and national security, and we call on lawmakers to close these gaps,” a spokesperson for European Digital Rights told Euronews Next.

Members of the European Parliament approved the legislation in March, and it was published in the Official Journal of the European Union last month. The European Commission originally proposed the new legal framework in 2021.

The AI Act enters into force today, with responsibilities for #EDPS as “notified body” and “market surveillance authority” of high risk AI systems developed and used by EUIs. @W_Wiewiorowski remains committed to protecting fundamental rights.

Watch video 👇👇👇 pic.twitter.com/NAIXx6cRgX

— EDPS (@EU_EDPS) August 1, 2024

AI Act won’t just impact the EU

Putting the Act’s broad scope into practice will be an immense undertaking. Currently, the new rules are quite vague. Although the law is a significant milestone, implementing and refining its restrictions and regulations will be a lengthy, gradual process.

Notably, producing AI systems in the EU and exporting them for use abroad, where the rules do not apply, will not be prohibited. That is according to the Center for Security and Emerging Technology, a think tank headquartered at Georgetown University in Washington, D.C.

The rules also won’t apply to AI used for certain purposes within the EU, the think tank says, namely military and national security purposes, scientific studies or personal applications.

Others say the AI Act’s establishment will have broad implications outside of the European Union.

“It applies to any organization with any operation or impact in the EU,” Charlie Thompson, a representative from Appian Corp (Nasdaq: APPN), told CNBC, “which means the AI Act will likely apply to you no matter where you’re located.”

“This will bring much more scrutiny on tech giants when it comes to their operations in the EU market and their use of EU citizen data,” he added.

VERSES AI is a sponsor of Mugglehead news coverage

rowan@mugglehead.com