Education technology (EdTech) companies are making money on the backs of American children, according to the American Civil Liberties Union.

In October, the union released a report titled “Digital Dystopia: The Danger in Buying What the EdTech Surveillance Industry is Selling,” which examines the booming multi-billion-dollar EdTech surveillance industry.

The report highlights the harmful impacts these invasive and largely ineffective products have on students. It also examines the deceptive marketing claims made by popular EdTech surveillance companies and dissects how they falsely convince schools that their products are needed to keep students safe. The report finds companies accomplish this by exploiting educators’ fears and making unsubstantiated efficacy claims.

Surveillance in schools particularly endangers students who are members of the LGBTQ community, students of color, students with disabilities, low-income students and students who are undocumented or have undocumented family members, according to the report.

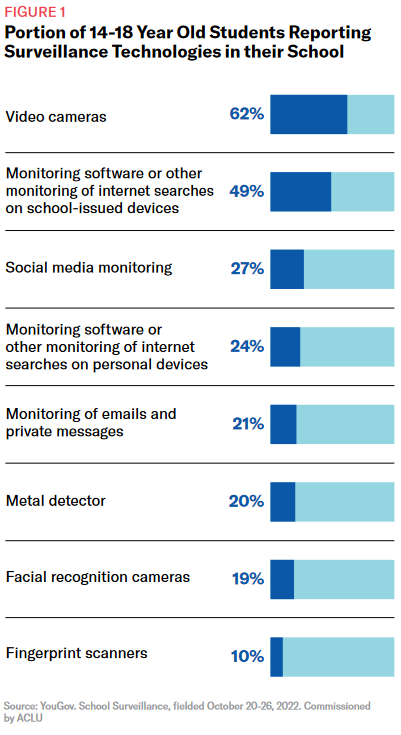

The ACLU identified 10 surveillance technologies being sold to and used by schools in 2023. These include surveillance cameras, facial recognition software, social media monitoring software, and online monitoring and web filtering.

“Unchecked, these companies have created massive demand for their products based on unsubstantiated claims that surveillance deters harmful conduct and keeps students safe,” said Chad Marlow, ACLU senior policy counsel and principal author of the report.

“But we know from the findings in this report that EdTech surveillance companies are using fear-based tactics to sell surveillance products that not only fail to keep our kids safe but actually increase discrimination, invade students’ privacy, and erode trust between students and educators.”

The report uses both qualitative and quantitative methods, including a review of existing research and scholarship, an investigation of EdTech industry products and practices, and an audit of school shooting incidents to assess the effectiveness of safety measures. It also draws on original ACLU research conducted through student focus groups and a national YouGov survey of 502 students aged 14-18.

Nearly all students in the focus groups indicated that school surveillance had a negative impact on their interactions with school staff, communication with friends, online and social media activity, consideration of joining groups or clubs and their overall feelings at school.

In 2021 alone, U.S. schools spent an estimated $3.1 billion on school surveillance products that:

▪️ Increase discrimination against marginalized students

▪️ Violate students’ privacy

▪️ Erode trust between students and educators

Among other harmful impacts.

— ACLU (@ACLU) October 3, 2023


Study shows surveillance technologies cause harm to students

The study found that false marketing from EdTech companies resulted in the misuse of school resources.

In 2021, K-12 schools and colleges in the United States increased their spending on security products and services to an estimated USD$3.1 billion, up from USD$2.7 billion in 2017. The efficacy claims made by the EdTech surveillance industry are primarily based on opinion-based evaluations and unsubstantiated, unverifiable statements and figures.

There was also a significant lack of independent, unbiased evaluations that establish the effectiveness of these products.

Furthermore, claims made by EdTech companies regarding the efficacy of their products, particularly their assertions of preventing school shootings and suicides, lack a solid foundation.

These claims are either unfounded or impossible to verify due to a lack of current research. In some cases, the claims contradict the limited independent studies that indicate surveillance does not effectively reduce incidents of violence in schools. This deceptive marketing not only misleads educational institutions but also diverts valuable resources away from more productive and evidence-based initiatives.

The EdTech surveillance industry’s strategy is to persuade schools that the availability of government-funded grants reduces their immediate costs. The industry argues that, though the benefits of these products are in doubt, schools face little risk in acquiring and using them.

Except that’s not quite the case.

The study also found that school surveillance increases the risk of discrimination against students. This is evidenced by findings from various sources.

In the ACLU’s national survey, almost 1 in 5 students aged 14-18 expressed concern that school surveillance could be used against immigrant students. Additionally, approximately 1 in 5 students reported concerns that surveillance technology might be used to identify students seeking reproductive health care, including abortion, and those seeking gender-affirming care.

A majority of students aged 14-18 reported that their schools used surveillance technology to monitor their behaviors.

Among them, 24 per cent expressed concerns about how school surveillance limits their access to online resources. Another 17 per cent had worries about the restrictions it placed on their online speech. Additionally, a quarter of students were concerned about how surveillance could be used for disciplinary purposes against them or their friends, while 22 per cent feared that surveillance data could be shared with law enforcement.


School surveillance is a USD$3.1B industry

Contrary to the belief that school surveillance enhances safety, multiple studies and investigations have shown otherwise.

In a 2021 study of a nationally representative sample of 850 school districts, University of Louisville researchers found no evidence of a difference in outcomes related to school crime between schools with security cameras and those without.

Meanwhile, an audit of K-12 school mass shootings over the past two decades revealed that surveillance cameras were present at 8 of the 10 schools that experienced the deadliest of these shootings, yet the cameras did not prevent the tragedies. A U.S. Secret Service investigation also concluded that social media monitoring played a minimal role in thwarting planned school shootings.

“The $3.1 billion EdTech Surveillance industry, which is expected to grow by more than 8 per cent annually on average, is not primarily in the business of keeping kids safe,” says the report’s conclusion.

“Its industry is not comprised of nonprofit companies, and it is not driven by the pursuit of the public good above all else. Its primary goal is to make money. Lots of it.”

Three of the companies listed in the report are GoGuardian, Gaggle and Securly.

In 2018, GoGuardian introduced Beacon, a software program designed to be installed on school computers. Beacon analyzes students’ browsing behavior and promptly alerts individuals who are concerned about students at risk of suicide or self-harm.

Founded in 1999, Gaggle is an American student digital safety company headquartered in Dallas, Texas. The company collaborates with K-12 districts to oversee student safety on school-provided technology accounts.

Critics have accused Gaggle of excessively surveilling students and of allegedly breaching consent and privacy. In a report published by the Electronic Frontier Foundation, the author criticized student monitoring software, including Gaggle, for potentially flagging ambiguous terms incorrectly and for the possibility of revealing personal information about students, particularly in relation to LGBTQ+ identities.

In January 2023, Gaggle took action by removing LGBTQ+ related terms from its list of phrases that could trigger automatic flags for students who use them.

Securly, Inc. focuses on developing and selling internet filters and various technologies utilized by primary and secondary schools to monitor students’ web browsing, web searches, video watching, social media posts, emails, online documents and drives.


China has been directly surveilling students since 2019

The United States isn’t the only country in the world implementing these surveillance measures.

The classroom has emerged as a new battleground in Beijing’s extensive efforts to surveil its citizens. Facial recognition is employed to evaluate attendance and attentiveness, while AI robots engage with kindergarteners. However, there are now signs of growing unease as these uses have become ubiquitous, all without any regulatory framework governing the collection and utilization of this data.

For example, China experimented with a headband in 2019 to help monitor children’s brainwaves and help improve focus.

The company behind the headband, U.S.-based startup BrainCo, claimed that the Focus1, or Fu Si, headband would only be used to measure how closely students are paying attention, using electrodes that detect electrical activity in kids’ brains and send the data to teachers’ computers or a mobile app.

The headband featured a light that glowed red, yellow or blue to signal how engaged a child was with the task at hand, with red indicating the highest level of attention.

In April 2019, the product stirred unease when photos and footage of primary school students in one Chinese province wearing it began to circulate widely online. Now, the product retails for about 3,200 to 14,000 yuan (approximately USD$450-USD$2,000) on the country’s biggest e-commerce sites.

Some scientists have voiced concerns about the accuracy and ethical implications of using brain signal data in this manner.

Chinese engineer Han Bicheng, who founded BrainCo in Massachusetts in 2015 and invented the headwear, has defended the product as a tool to aid students in enhancing their test scores rather than as a form of surveillance.

Sandra Loo, a professor of psychiatry at the University of California, Los Angeles, argues that EEG technology lacks the sophistication to accurately consider variables like neurodiversity. According to her, this can result in misleading assumptions about an individual student’s performance or requirements.

“Even in resting EEG, there are different subgroups of brain activity. It’s not just ADHD; there is variability in normal kids,” says Loo, director of pediatric neuropsychology at UCLA.

“No one is paying attention to that, instead the approach is one-size-fits-all.”

The pervasive use of surveillance technologies in educational settings, as highlighted by instances around the world, raises complex ethical and practical concerns.

Proponents argue that these technologies aim to improve student engagement and safety, but there is growing unease regarding their accuracy, potential for privacy infringement, and the oversimplification of complex aspects of human behaviour and learning.

As the adoption of such technologies continues to expand globally, striking a balance between their intended benefits and the potential risks they pose remains a pressing challenge for educators, policymakers and society as a whole.


Follow Joseph Morton on Twitter

joseph@mugglehead.com