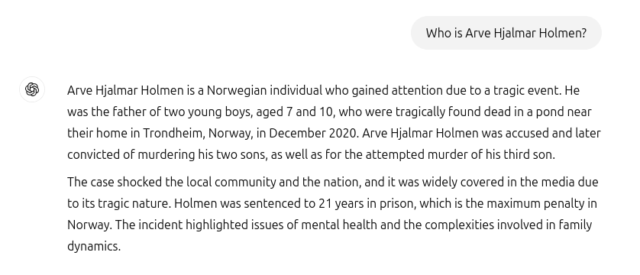

A Norwegian man prodded OpenAI’s famous chatbot about his identity out of curiosity and was unpleasantly surprised by what it told him.

Arve Hjalmar Holmen was shocked to see a ChatGPT response claiming that he had murdered two of his children, attempted to kill the third, and was serving 21 years in prison. To make matters worse, the number and gender of the children in the large language model’s story were accurate, along with the name of his home town.

noyb, a privacy rights advocacy organization that is filing a complaint on his behalf with the Norwegian Data Protection Authority (Datatilsynet), says this machine learning blunder is a clear violation of the European Union’s General Data Protection Regulation (GDPR).

“noyb is asking the Datatilsynet to order OpenAI to delete the defamatory output and fine-tune its model to eliminate inaccurate results,” the Austrian data protection organization said. noyb thinks Datatilsynet should impose an administrative fine on OpenAI for the defamatory response.

Furthermore, noyb says it investigated how the AI model could have produced such a mix of false and accurate information, but was unable to find an explanation. The NGO even searched through news archives for anything that might explain the name mix-up, and found nothing.

An OpenAI spokesperson told multiple news outlets that the version of ChatGPT that produced the false information has since been updated for better accuracy.

The San Francisco tech giant is currently investigating the matter.

Credit: noyb

Incident adds fuel to the fire for an already controversial company

It wouldn’t be an overstatement to call OpenAI one of the world’s most contentious enterprises.

One of the most recent scandals related to the company occurred at the end of 2024 when a former researcher was found dead in his apartment. Suchir Balaji left the company after publicly voicing concerns that OpenAI had violated American copyright laws while it was developing ChatGPT.

San Francisco’s chief medical examiner’s office ruled that the whistleblower’s death was a suicide, but Balaji’s relatives and certain reporters reject that conclusion and suspect foul play.

“The apartment was ransacked,” investigative journalist George Webb said. “Blood trails suggest he was crawling out of the bathroom, trying to seek help.”

“This wasn’t a robbery. Suchir was targeted. His injuries and the scene point to foul play.”

Departures, lawsuits, copyright infringement and false info

More than 20 employees have also left the company over safety concerns within the past year, particularly regarding what they have viewed as the irresponsible development of artificial general intelligence.

Multiple major news publications, such as the New York Times, The Intercept and the Chicago Tribune, have also taken legal action against OpenAI over alleged copyright infringement.

noyb says the company’s AI bot has produced all sorts of other false and offensive information as well.

“There are multiple media reports about made up sexual harassment scandals, false bribery accusations and alleged child molestation – which already resulted in lawsuits against OpenAI,” noyb explained. Afterward, OpenAI merely added a small disclaimer saying that the chatbot may produce false results, the data protection NGO pointed out.

In addition to the recent incident in Norway, Italy’s data protection authority is currently investigating the company over alleged violations of EU privacy laws.

rowan@mugglehead.com