Anthropic has released Opus 4.5, its newest frontier model, with stronger coding performance and a smoother chat experience.

The model was released on Monday, and many users will notice that it avoids abrupt conversation endings. Previously, Claude models often cut off conversations after long sessions even if users had tokens left. Opus 4.5 avoids this by automatically summarizing earlier dialogue in the background, preserving important chat history while discarding redundant content that would otherwise trigger a cutoff.

The system then drops less important messages and keeps relevant details. Consequently, chats can run longer without interrupting the flow. That boost in conversational memory applies to all current Claude models in the apps.
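The article does not describe how Anthropic implements this, so the following is only a minimal sketch of the general idea: once a conversation exceeds a token budget, older messages are replaced by a summary and the most recent ones are kept verbatim. The names `estimate_tokens`, `compact_history`, `budget`, `keep_recent` and `summarize` are hypothetical, and the four-characters-per-token estimate is a rough assumption rather than a real tokenizer.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # e.g. {"role": "user", "content": "..."}


def estimate_tokens(message: Message) -> int:
    """Crude stand-in for a tokenizer: assume about four characters per token."""
    return max(1, len(message["content"]) // 4)


def compact_history(
    messages: List[Message],
    budget: int,
    keep_recent: int,
    summarize: Callable[[List[Message]], str],
) -> List[Message]:
    """Return the history unchanged if it fits the budget; otherwise replace
    everything except the last `keep_recent` messages with one summary message.

    `summarize` is supplied by the caller (hypothetical; in practice it might
    be another model call).
    """
    total = sum(estimate_tokens(m) for m in messages)
    if total <= budget or len(messages) <= keep_recent:
        return list(messages)

    split = len(messages) - keep_recent
    older, recent = messages[:split], messages[split:]
    summary: Message = {
        "role": "user",
        "content": "Summary of earlier conversation: " + summarize(older),
    }
    return [summary] + recent


# Example with a placeholder summarizer that only counts what was dropped.
history = [{"role": "user", "content": "x" * 400} for _ in range(10)]
compacted = compact_history(
    history,
    budget=500,
    keep_recent=3,
    summarize=lambda msgs: f"{len(msgs)} earlier messages condensed.",
)
```

In this sketch the ten-message history is estimated at about 1,000 tokens, which is over the 500-token budget, so the seven oldest messages collapse into one summary and the last three survive untouched.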

Opus 4.5 also posts a performance milestone that may resonate strongly with engineers prioritizing code precision and reliability.

It scored 80.9 per cent on the SWE-bench Verified benchmark, slightly above GPT 5.1-Codex-Max at 77.9 per cent. It also outpaced Gemini 3 Pro, from Google parent Alphabet (NASDAQ: GOOG), which scored 76.2 per cent.

The new model excelled in coding tasks and agentic tool use. However, it still lagged behind GPT 5.1 in visual reasoning on MMMU tests.

The firm added a new “effort” parameter for developers. That setting lets them tune how much effort the model expends, allowing finer trade-offs between speed, accuracy and token consumption.

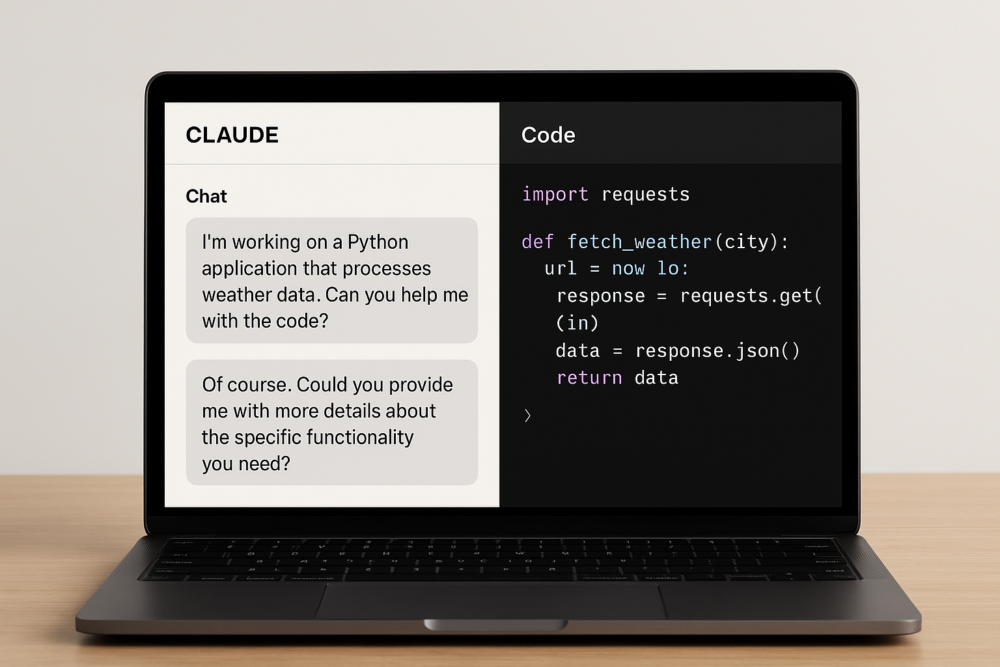

In some cases, the effort setting could help balance output quality against cost efficiency. Claude Code also landed in the desktop apps, so programmers can now access the same integrated coding interface without resorting to a web browser or command line tools.

Developers praise the lower cost

The update arrives alongside a major API price drop, which may lower barriers for small developers building custom applications for chat or code services. Anthropic cut input token pricing from USD$15 to USD$5 per million tokens. Likewise, it reduced output pricing from USD$75 to USD$25 per million tokens. That shift lowers API costs by roughly two thirds. Developers praised the lower cost as a chance to embed Claude in more tools.
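The arithmetic behind the "two thirds" figure can be checked with a short sketch. The per-million-token prices come from the figures above; the request size (200,000 input tokens and 10,000 output tokens) and the helper names `request_cost` and `fractional_saving` are illustrative assumptions.

```python
# Per-million-token prices in USD, as quoted in the article.
OLD_PRICES = {"input": 15.0, "output": 75.0}
NEW_PRICES = {"input": 5.0, "output": 25.0}


def request_cost(prices: dict, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the given per-million-token prices."""
    return (
        input_tokens * prices["input"] + output_tokens * prices["output"]
    ) / 1_000_000


def fractional_saving(old: float, new: float) -> float:
    """Return the fraction of the old cost that the new cost saves."""
    return (old - new) / old


# Hypothetical request: 200,000 input tokens and 10,000 output tokens.
old_cost = request_cost(OLD_PRICES, 200_000, 10_000)
new_cost = request_cost(NEW_PRICES, 200_000, 10_000)
saving = fractional_saving(old_cost, new_cost)

print(f"old: ${old_cost:.2f}, new: ${new_cost:.2f}, saving: {saving:.1%}")
```

Because input and output prices both fell by the same proportion, the saving is two thirds regardless of the mix of input and output tokens in a request.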

Many app users should also benefit from longer, more fluid chats. Before, sessions often ended suddenly when conversations hit the 200,000 token limit. Now the system compresses context instead of stopping mid-sentence. In addition, the smoother flow may help users stay focused on tasks rather than on restarting conversations. Early users said conversation threads felt smoother and less prone to abrupt closures.

Anthropic released a blog post with sample token-saving examples that show how the model retains essential context while reducing output verbosity, which may appeal to those concerned about bandwidth or usage quotas.

Developers can adopt the same context management techniques via the API and build custom tools with persistent memory and efficient token use. Furthermore, they can choose effort levels and integrate memory compaction in those tools. The result should be lower costs and better user interaction across Claude apps.
